Introduction

When it comes to large language models, bigger is often better, but better is even better. One of the insights the Meta AI research team drew from the Llama family of models is that, for AI inference, you should first optimize for the best performance at the lowest cost and then address any remaining inefficiencies.

Understanding Llama 3

Meta has returned with Llama 3, the latest generation of its LLM, which is said to be its most capable model to date, with considerable improvements in performance and AI capabilities. Llama 3 builds on the Llama 2 architecture and is available in two sizes: 8B and 70B parameters.

Each size comes as a base model and an instruction-tuned version that has been fine-tuned to perform better on specific tasks. According to reports, the instruction-tuned version is intended to power AI chatbots that converse with users. The improvement is most evident during model inference, when the system processes inputs and generates outputs in real time.

Aspects Of Llama 3

- The newly developed LLM system "excels at language nuances, contextual understanding, and complex tasks like translation and dialogue generation."

- It can manage multi-step tasks with "significantly lower false refusal rates," with improved response alignment and increased model answer diversity.

- Llama 3 also shows improved capabilities in reasoning, code generation, and instruction following.

- In terms of model performance, Meta created a new high-quality human evaluation set with 1,800 prompts covering 12 key use cases: seeking advice, brainstorming, classification, closed question answering, coding, creative writing, extraction, inhabiting a character, open question answering, reasoning, rewriting, and summarizing.

Technical Overview

Llama 3 from Meta AI uses a standard decoder-only transformer architecture. It introduces a tokenizer with a 128K-token vocabulary that encodes language more efficiently, which in turn substantially improves model performance.

Architecture

Llama 3 adopts grouped-query attention (GQA) in both the 8B and 70B models to improve inference efficiency. The models are trained on sequences of up to 8,192 tokens, with a mask that prevents self-attention from crossing document boundaries.
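To make the masking idea concrete, here is a minimal sketch of how such a mask could be built when several documents are packed into one training sequence. The function name `document_causal_mask` and the `doc_ids` input are assumptions made for illustration; Meta has not published this as its implementation.

```python
def document_causal_mask(doc_ids):
    """Return mask[i][j] = True when position i may attend to position j.

    A position may attend only to itself and to earlier positions (causal)
    that belong to the same document.
    """
    n = len(doc_ids)
    return [
        [j <= i and doc_ids[i] == doc_ids[j] for j in range(n)]
        for i in range(n)
    ]


# Two documents packed into one sequence: A A A B B (hypothetical example).
doc_ids = [0, 0, 0, 1, 1]
mask = document_causal_mask(doc_ids)
for row in mask:
    print("".join("x" if allowed else "." for allowed in row))
```

Each printed row is one query position. The tokens of the second document never see the tokens of the first, even though both sit in the same sequence.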

Tokenizer

Llama 3 features a new tokenizer. With a vocabulary of 128K tokens, it encodes text more efficiently than its predecessor, so the same text is represented with fewer tokens, resulting in better inference performance.
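The effect of vocabulary size on token count can be illustrated with a toy example. The sketch below uses a simple greedy longest-match tokenizer, which is not the algorithm Llama 3 uses; the function `greedy_tokenize` and both vocabularies are invented for illustration only.

```python
def greedy_tokenize(text, vocab):
    """Split text by repeatedly taking the longest matching vocabulary entry.

    Falls back to a single character when no longer entry matches.
    """
    longest = max(len(piece) for piece in vocab)
    tokens = []
    i = 0
    while i < len(text):
        for size in range(min(longest, len(text) - i), 0, -1):
            piece = text[i:i + size]
            if size == 1 or piece in vocab:
                tokens.append(piece)
                i += size
                break
    return tokens


# Hypothetical vocabularies: one with characters only, one with larger pieces.
small_vocab = {"t", "o", "k", "e", "n", "i", "z", "r"}
large_vocab = small_vocab | {"token", "izer"}

text = "tokenizer"
print(len(greedy_tokenize(text, small_vocab)))  # 9 single-character tokens
print(greedy_tokenize(text, large_vocab))       # ['token', 'izer']
```

Fewer tokens for the same text means fewer decoding steps at inference time, which is the intuition behind the larger vocabulary.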

RoPE

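Rotary Position Embedding (RoPE), the positional encoding technique used by Llama 3, sits between absolute and relative positional encodings: it rotates each query and key vector by an angle determined by its absolute position, so that the resulting attention score depends only on the relative distance between the two tokens.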
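A small sketch can show how a rotation based on absolute position ends up producing scores that depend only on relative position. It assumes the formulation that rotates consecutive pairs of dimensions and an illustrative `base` of 10000; the helper names `rope` and `dot` are made up here, and real implementations differ in layout and settings.

```python
import math


def rope(vec, position, base=10000.0):
    """Rotate each consecutive pair of dimensions by a position-dependent angle."""
    dim = len(vec)
    out = []
    for k in range(0, dim, 2):
        # Lower dimensions rotate quickly, higher dimensions slowly.
        theta = position * base ** (-k / dim)
        cos_t, sin_t = math.cos(theta), math.sin(theta)
        x, y = vec[k], vec[k + 1]
        out += [x * cos_t - y * sin_t, x * sin_t + y * cos_t]
    return out


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


# Toy query and key vectors (hypothetical values).
q = [1.0, 0.5, -0.3, 2.0]
k = [0.2, -1.0, 0.7, 0.4]

# Same relative offset (3 positions apart) at two different absolute positions.
score_near = dot(rope(q, 5), rope(k, 2))
score_far = dot(rope(q, 105), rope(k, 102))
print(abs(score_near - score_far) < 1e-9)
```

The two scores should agree up to floating-point error, because shifting both positions by the same amount leaves the angle between the rotated vectors unchanged.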

GQA

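Grouped-query attention (GQA) is an attention mechanism that sits between multi-head attention (MHA) and multi-query attention (MQA). In MHA every query head has its own key and value head, while in MQA all query heads share a single one. GQA divides the query heads into groups, and each group shares one key and value head.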
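The relationship between the three variants comes down to which key/value head each query head reads from. The sketch below shows that mapping with toy head counts; `kv_head_for` is a hypothetical helper, and the numbers are not Llama 3's actual configuration.

```python
def kv_head_for(query_head, n_query_heads, n_kv_heads):
    """Return the index of the key/value head that a query head reads from."""
    if n_query_heads % n_kv_heads != 0:
        raise ValueError("n_query_heads must be a multiple of n_kv_heads")
    group_size = n_query_heads // n_kv_heads
    return query_head // group_size


n_query_heads = 8  # toy value for illustration
for name, n_kv_heads in [("MHA", 8), ("GQA", 2), ("MQA", 1)]:
    mapping = [
        kv_head_for(h, n_query_heads, n_kv_heads) for h in range(n_query_heads)
    ]
    print(name, mapping)
```

With 8 query heads and 2 key/value heads, only a quarter of the keys and values need to be stored during inference compared with MHA, which is where the efficiency gain comes from.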

KV Cache

Key-Value (KV) caching is one method used to accelerate inference in autoregressive models such as GPT and Llama. The model stores the keys and values it has already computed for earlier tokens, so each new decoding step only has to compute them for the newest token instead of repeating the work for the whole sequence.
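The saving is easy to see in a toy decoder loop. The following sketch uses single-head attention in plain Python; the `project` function is a hypothetical stand-in for learned key and value projections, and each token vector doubles as its own query to keep the example short.

```python
import math


def attend(query, keys, values):
    """Single-head scaled dot-product attention for one query vector."""
    scale = math.sqrt(len(query))
    scores = [sum(q * k for q, k in zip(query, key)) / scale for key in keys]
    peak = max(scores)  # subtract the max for a numerically stable softmax
    weights = [math.exp(s - peak) for s in scores]
    total = sum(weights)
    return [
        sum(w * value[d] for w, value in zip(weights, values)) / total
        for d in range(len(values[0]))
    ]


def project(token_vec, factor):
    """Hypothetical stand-in for a learned key or value projection."""
    return [factor * x for x in token_vec]


tokens = [[1.0, 0.0], [0.5, 0.5], [0.0, 2.0]]  # toy token embeddings

# With a cache: project each new token once and append it to the cache.
key_cache, value_cache, cached_outputs = [], [], []
for tok in tokens:
    key_cache.append(project(tok, 0.5))
    value_cache.append(project(tok, 2.0))
    cached_outputs.append(attend(tok, key_cache, value_cache))

# Without a cache: re-project the whole prefix at every step.
recomputed_outputs = []
for t in range(len(tokens)):
    prefix = tokens[: t + 1]
    keys = [project(p, 0.5) for p in prefix]
    values = [project(p, 2.0) for p in prefix]
    recomputed_outputs.append(attend(tokens[t], keys, values))

print(cached_outputs == recomputed_outputs)
```

Both loops produce the same outputs, but the cached version performs one key and one value projection per step, while the uncached version repeats them for every token in the prefix.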

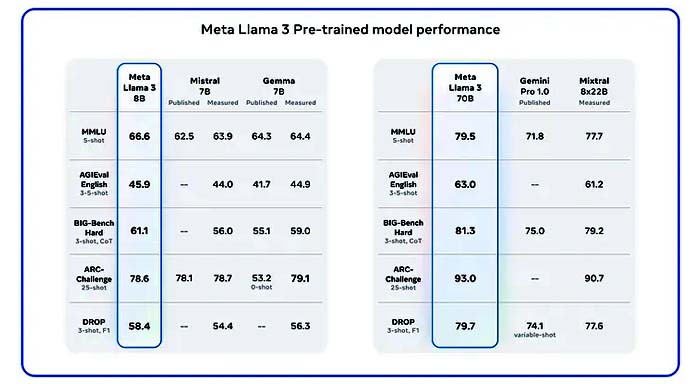

Comparison With Other AI Models

To assess model performance, Meta created a new high-quality human evaluation set of 1,800 prompts covering 12 key use cases: asking for advice, brainstorming, classification, closed question answering, coding, creative writing, extraction, inhabiting a character or persona, open question answering, reasoning, rewriting, and summarizing.

Meta then compared Llama 3's results with those of Claude Sonnet, Mistral Medium, and GPT-3.5 in each of these categories.

What Can Llama 3 Do?

Simpler interaction with the feed:

Meta AI can fetch real-time information from the internet without requiring you to switch apps. For instance, if you come across a post about flower viewing in Japan while scrolling through your Facebook feed, you can ask Meta AI about the best time of year or the best places to go.

Building Meta AI with Llama 3:

Meta also unveiled Meta AI, an assistant built on the new LLM. It will be accessible in the US, Australia, Canada, Ghana, Jamaica, Malawi, New Zealand, Nigeria, Pakistan, Singapore, South Africa, Uganda, Zambia, and Zimbabwe on platforms such as Facebook, Instagram, WhatsApp, and Messenger.

Meta AI accelerates the creation of images:

Meta AI can turn text into images. In the US, you can access the beta version of this feature via WhatsApp and the Meta AI website.

According to Meta, an image appears as soon as users begin typing and changes with each letter entered.

Conclusion

Llama 3 is a strong addition to the open-model generative AI stack. Based on the strong initial benchmark results, the upcoming 400B version may compete with GPT-4. With this release, Meta has also made great strides in distribution, making Llama 3 accessible on all major machine-learning platforms.