In the easiest and precise way crawl budget is defined as the total number of webpages Google bots either index or crawl in a given span of time.

Now the question arises, is it a legitimate ranking factor and how can it affect your website SEO (Search Engine Optimization)? Let’s have a look at the answer to both questions.

We believe you already know if a webpage is not indexed or crawled by the Google bot then it will not visible on the Google SERP (Search Engine Results Pages). In order to rank on the search engine, you first need to make sure that the page is indexed correctly.

Now, suppose if the total number of pages on your websites increased the crawl budget threshold then you will be left with the non-indexed pages. But the good thing is that Google does quite an efficient job in finding and indexing the pages.

Still, there are some scenarios where you would like to consider the crawl budget.

- Big websites: It should concern two types of websites. The first site with more than 1 million unique web pages and whose content changes moderately (around once per week). The second type is those websites that have comparatively fewer pages around 10,000+ unique pages but their content changes rapidly i.e. on a daily basis.

- When you add hundreds of pages in a short period of time: Sometimes publishers add new sections and pages to their website in a brief period. In this case, you need to make sure that you don’t exceed the crawl budget set by Google for your website.

- Too many redirections: If you have too many pages redirected then it is potentially decreasing your crawl budget.

Terminologies related to Crawl Budget

1. Crawl Rate Limit

If you are aware of how a search engine finds the web pages then you will know about Google bots. Their main function is to crawl through the websites and while doing so they also have to make sure to degrade the experience of users visiting the site.

In order to make sure this, Googlebot works under the crawl rate limit. This is the maximum fetching rate for a particular website.

Factors that affect the Crawl limit of website

- Crawl Health: This factor is directly proportional to the loading speed and response time of the website. If the website is responding well and swift enough then the crawl limit will go up for sure. It means, if the site is slow or has many server errors then the Googlebot will prefer to crawl fewer pages in one go.

- Google’s Crawl Capacity Limit: Google may have millions or billions of bots but still they’re not infinite in numbers. There are few times Googlebot needs to prioritize other websites that can result in a delay in your website.

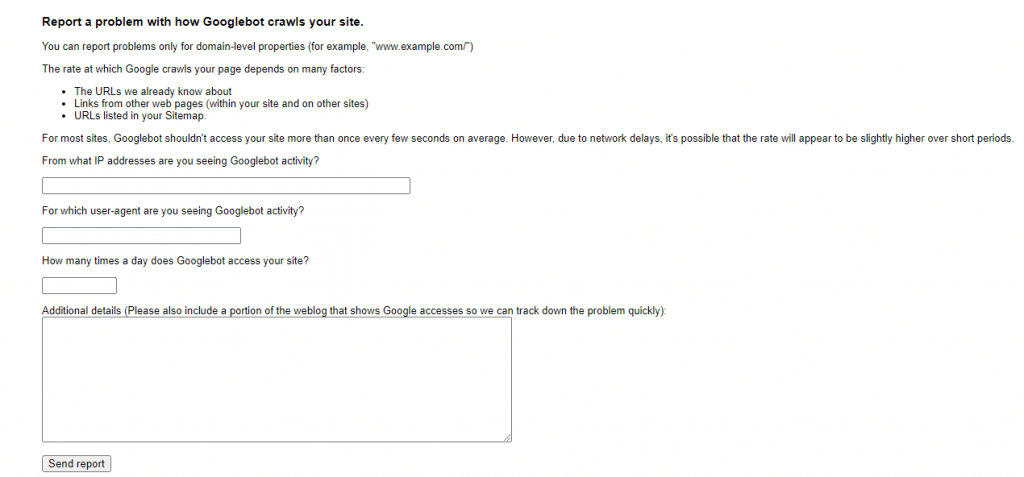

- Limit set by the webmasters in the Google Search Console: You might not know but you can set the crawl rate limit directly from the Search Console. You can either reduce it or set it at a higher limit. (Note: Setting a higher limit doesn’t guarantee an increase in crawling.)

In the below section we have shown you how to set your desired crawl rate limit in the Google Search Console.

Change Crawl Rate Limit

- Open the Google Search Console and choose the appropriate property.

- After that open the Crawl Rate settings page.

- Here, if you see the “Crawl Rate is calculated as optimal” and you still want to change it then you need to file a formal request.

- However, if you see the “otherwise” option then you can set the desired limit. Remember the changes you made will only be valid for 90 days.

2. Crawl Demand

Now, you must be wondering how Google decides how much time it is necessary to spend on crawling a website. There are quite a lot of determining factors like website size, update frequency, quality of pages, relevance, etc. After comparing these elements, Google comes up with an Optimal Crawl Budget or Crawl Rate limit.

Let’s have a look at some key factors that can be controlled by the website owners.

Manage URL Inventory

By default, Googlebot is designed to crawl and index every page on a website. But on a website, there are many pages that are not needed to be crawled because they could be unimportant, removed, duplicated, etc. If you don’t guide the Googlebot and tell them which page is important and which is not, they’ll crawl all of them.

This simply wastes your assigned Crawl time or limit. However, the good news is that you can tell bots by what to crawl by using sitemaps and what not to crawl by using robot.txt or noindex tag.

The popularity of the website

Web Pages that are more popular and relatively updated more frequently than others are crawled more often. This keeps them fresh in Google’s index.

Staleness

In order to prevent staleness in the index, Google prefers to recrawl the pages and documents to see if any changes are done.

How to maximize crawling efficiency?

In order to utilize the crawling ability of Google bots to the greatest extent, you need to manage the URL inventory as efficiently as possible. For this, you may require various SEO tools to distinguish between the pages which need to be crawled and those which are not.

Remember if Google bots spend time crawling the pages that are not appropriate for the index then they might not continue to crawl your website further. Therefore, it is utterly important to guide the crawlers. Below, we have enlisted numerous practices and do’s & don’t that will help you out.

- Remove duplicate content: We all know duplicate content affects the website performance in a bad way, therefore, you need to make sure to eliminate duplicate content completely.

- Use robot.txt or noindex to block the crawling of URLs that you don't want to be indexed: As we mentioned earlier, not all web pages hold the same value. On every website, there are some pages that should not appear in search results. For such pages that you don’t want to get indexed, you can use the

robot.txtor noindex tag. This also allows you to utilize the assigned crawl budget more efficiently. - Return 404/410 for permanently removed pages: For the pages that have been permanently removed you should use 404, which gives a signal not to crawl the page again. If you don’t use 404 then bots might recrawl these pages again and this negatively affects the crawl budget.

- Keep your sitemaps up to date: Sitemaps are important because they guide the Googlebots to the most important and newly added pages. And if you have recently updated the content on your website then Google recommends the use of

<lastmod>in the sitemaps. - Avoid long redirect chains: Only use redirects when there is no other solution. Using too many redirections or a long redirect chain can negatively affect crawling.

- Make your pages efficient to load: It’s a known fact that faster loading times not only improve the user experience but also allow bots to crawl more pages in the given window of time. So try to keep the webpage loading speed as fast as possible.

Final Takeaway

In the end, we have drawn the conclusion that crawl budget is an important factor that might affect the indexing of your website. If you’re running a large website then you should immediately consider and optimize it. However, if your website has relatively fewer pages then the probability is all pages will get indexed sooner or later.

Related Post: